A Crash Course in AWS

Introduction: Data science in the cloud

In an age of big data, it’s increasingly convenient and even necessary to do data science in the cloud, that is, using on-demand computing resources that are effectively rented from a provider rather than building and maintaining private computing infrastructure. There are two main reasons that the cloud has exploded in popularity over the past fifteen years. The first of these is maintenance. This includes the raw capital needed to both build and maintain computing infrastructure but also more abstract considerations like availability and security. Like any utility, computing becomes cheaper at scale, but that scale is beyond the reach of all but the largest and best-resourced companies. As a result, for startups and smaller companies, it’s simply more efficient to rent their computing resources from a provider rather than invest in their own. The second reason is flexibility, or the ability to easily scale resources up and down to match current needs. From a web development perspective, this is often framed as provisioning resources for a server to match the current demand for a website or service. However, this elasticity also applies to a data science context where storage and compute needs may suddenly spike due to the acquisition of a TB-sized data set or the one-off training of a large language model.

There are a variety of cloud services from the expected players (Amazon, Google, Microsoft) as well as some more boutique providers that repackage those services in other forms for specific applications, like Netlify and (formerly) Dropbox. However, Amazon’s cloud computing service, AWS, is one of the oldest and largest and, accordingly, has a vast array of products that cover a variety of use cases, depending on the desired level of control over configuration. I’m not a web developer or data engineer, so I’m by no means an expert on any of these services and the trade-offs between them. I instead come from computational biology and bioinformatics, so my point of view is influenced by high-performance computing.1

As a result, I tend to approach topics in computing from a bottom-up perspective, and in this post I’ll explain what I see as the building blocks of AWS that many of its other services are built on. This is an explanation rather than a tutorial or how-to guide, so I won’t walk through the specific steps for setting up an account on AWS and getting an instance up and running with your favorite data science environment. Instead I’ll focus on how big picture concepts in cloud computing relate to the specific services offered by AWS. Along the way I’ll also link to some user and how-to guides that show in detail how these resources are configured. As a final note, I should mention that I’m not affiliated with Amazon and that my choice of AWS as the topic of this post is mostly a reflection of its popularity rather than an endorsement over its competitors.

The three resource cards

Computing resources in the cloud can broadly be divided into three categories: compute, storage, and network. However, to make this model more concrete for our data science perspective, we can frame these resources in terms of how they interact with data:

- Compute: manipulating data

- Storage: storing data

- Network: transferring data

Amazon offers different services for different needs in each of these categories, and for the most part, they can be mixed and matched, which gives a high (if at first overwhelming) amount of flexibility. In the next sections, I’ll cover the highlights and relationships between each in more detail with an emphasis on applications in data science.

Compute

The basic compute unit in AWS is an EC2 instance. EC2 stands for Elastic Compute Cloud and refers to the ability to provision compute resources called instances on demand. Each instance has a specified hardware configuration and computing environment where the environment includes the operating system and any pre-installed software. You can think of provisioning an EC2 instance as effectively renting a laptop with a clean installation of the desired computing environment. In practice, these instances are often virtual machines running on a more powerful system, but for our purposes we can think of them as independent computational units. The computing environments are built from templates called images, and Amazon has a library of images for launching instances, though there are also options for buying them from a marketplace or creating your own. These images are one method to avoid the time-consuming and error-prone installation and configuration of standard packages every time an instance is launched.2

Many other services are built over EC2 that abstract or automate the details of managing nodes. For example, Lambda is a “serverless” compute service that can execute code on demand in response to events. A Lambda function can automatically run on each file uploaded to a data storage bucket to perform some computation. Of course behind the scenes, a server is spinning up the compute instance and running the code, but these details are hidden from the user. A similar service is ECS (Elastic Container Service), which automates the creation and configuration of containers. Lambda currently has an execution time limit of 15 minutes, so ECS is intended for more intensive and long-running compute needs. One use case is the deployment of machine learning models where every user of a photo storage service needs a unique model for recognizing the specific faces in their library. Since it’s not possible to manually request instances and manage their computing environments for millions of users, ECS offers an interface for managing containers at scale.
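To make the upload-triggered pattern a little more concrete, here is a minimal sketch of what a Python Lambda function might look like. It assumes the function has been connected to a bucket's object-created notifications; the name `handler` and the returned summary are my own illustrative choices, and the "computation" is only a placeholder that reports which object arrived.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Summarize the objects named in an S3 event notification."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (e.g. spaces as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size")
        # Placeholder for real work, e.g. downloading and processing the object.
        results.append({"bucket": bucket, "key": key, "size": size})
    return results
```

Because the handler is an ordinary function that takes a dictionary, it can be exercised locally with a hand-written event before it is ever deployed.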

Storage

In contrast to its compute services, which are all built with a single fundamental unit, AWS has a variety of storage solutions that differ in their availability, persistence, and performance. The main options for storing unstructured data in arbitrary file formats (as opposed to structured data in databases) are S3, EBS, and EFS. Of the three, S3 (Simple Storage Service) is the most suited to storing large amounts of both structured and unstructured data, often in so-called “data lakes.” Units of data in S3 are called “objects” which are in turn stored in containers called “buckets.” In contrast to traditional hierarchical file systems, however, buckets are flat and have no internal directory structure. This simple architecture can scale to arbitrary volumes of data, and S3 buckets have no total size or object limits. (Objects themselves, though, are limited to a maximum size of 5 TB.) Data in S3 is also easily available, as objects can be accessed through web interfaces or queried directly in place as a database with Amazon Athena. Finally, S3 offers storage tiers which differ in their access time guarantees. The least available, Glacier Deep Archive, can have retrieval times of up to 48 hours and is intended for data which is rarely, if ever, accessed.
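The idea of a flat bucket can be confusing since object names often contain slashes and look like paths. The following toy model (plain Python, not the S3 API) treats a bucket as nothing more than a list of key strings and shows how a folder-like view can be derived purely from a prefix and a delimiter. The function name `list_prefix` and the example keys are hypothetical.

```python
def list_prefix(keys, prefix="", delimiter="/"):
    """Toy model of prefix/delimiter listing over a flat key space.

    Returns (objects, common_prefixes) as a folder-like view would show them.
    This is an illustration only, not the S3 API.
    """
    objects, common = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter in rest:
            # Everything up to the next delimiter behaves like a "folder".
            common.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            objects.append(key)
    return objects, sorted(common)


bucket = ["readme.txt", "raw/2023/a.csv", "raw/2023/b.csv", "raw/2024/c.csv"]
print(list_prefix(bucket))          # (['readme.txt'], ['raw/'])
print(list_prefix(bucket, "raw/"))  # ([], ['raw/2023/', 'raw/2024/'])
```

Nothing here is a directory. The "folders" exist only as shared prefixes among otherwise independent keys.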

The remaining two services are more analogous to the kinds of storage used with a personal computer. For example, EFS (Elastic File System) is a hierarchical file system that can store files in a tree of directories. Additionally, EFS file systems can connect to thousands of EC2 instances, allowing it to act as a shared drive. As a result, EFS file systems are likely the best options for collaborative analyses of large data sets since team members can directly access and manipulate common resources. The downside is that mounting EFS file systems on EC2 instances requires slightly more configuration. The EC2 instance launcher fortunately has options that will automatically mount an EFS file system. In cases where a more programmatic approach is necessary, though, the following guides demonstrate how to mount an EFS file system through a command line interface one time and on reboot.

Finally, EBS (Elastic Block Store) volumes are like flash drives which can be attached to EC2 instances. EBS volumes offer the lowest-latency access across all AWS storage options. They also persist between connections, unlike instance “stores” which are erased even when their instances are temporarily stopped. As a result, EBS volumes are best for high-performance applications with persistent data. However, unlike EFS file systems, EBS volumes come in fixed sizes and have greater restrictions on the number of EC2 instances they can simultaneously connect to, so they are a less flexible option for shared file storage.

Network

Everything in AWS happens over interconnected computers, so effectively managing communications between the various services requires understanding the basics of these networks and their relationship to the organization of Amazon’s physical computing infrastructure. Accordingly, this section has three parts. In the first, I cover some networking fundamentals. Then in the second, I dive into specifics of the AWS physical infrastructure. Finally in the third, I discuss some details of configuring network settings in AWS.

Networking basics

A basic goal in networking is transferring data between devices, and much like how letters are sent between physical addresses, network information is sent between network addresses. There are a few types of these addresses depending on the scope of the network, but IP (Internet Protocol) addresses are used for communications over the global network of computers called the Internet. The basic idea is to give every device connected to the Internet a unique label in the form of an IP address, though in practice it’s a little more complex. For now we’ll ignore those nuances and instead focus on the structure and organization of IP addresses, which come in two flavors: IPv4 and IPv6. Currently the internet is in the process of transitioning to IPv6, but IPv4 remains widely used, so we’ll start there.

IPv4 addresses are 32-bit integers, commonly represented as a sequence of four bytes in decimal-dot notation. For example, 01111111 00000000 00000000 00000001 is the same as 127.0.0.1. IP addresses are allocated in blocks to providers where high bits refer to a block of related addresses (network address), and the low bits designate a specific device (host address). (Because devices on the same subnetwork are typically close in physical space, this hierarchical organization improves the efficiency of routing requests.) This division between the network and host portions of the address is given in CIDR notation, which is an extension of IPv4 decimal-dot notation. The number of bits, n, that designate the network address portion counting from left to right is indicated with /n appended to the end of the IP address. For example, 198.51.100.14/24 represents the IPv4 address, 198.51.100.14, and its associated network prefix, 198.51.100.0. CIDR notation can also compactly communicate a range of IPv4 addresses, e.g., a.b.0.0/16 means all addresses from a.b.0.0 to a.b.255.255.

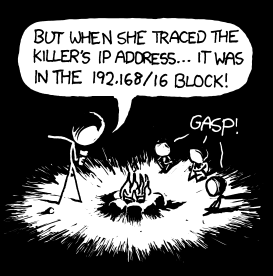
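These conversions are easy to check with Python's standard `ipaddress` module. The sketch below reproduces the examples from the text; the block `10.20.0.0/16` is an arbitrary stand-in I chose for the generic a.b.0.0/16.

```python
import ipaddress

# The binary form from the text, converted to dotted notation.
bits = "01111111 00000000 00000000 00000001"
addr = ipaddress.IPv4Address(int(bits.replace(" ", ""), 2))
print(addr)  # 127.0.0.1

# An address together with its prefix length.
iface = ipaddress.ip_interface("198.51.100.14/24")
print(iface.ip)       # 198.51.100.14
print(iface.network)  # 198.51.100.0/24

# A /16 block leaves 16 host bits, so it spans 2**16 addresses.
block = ipaddress.ip_network("10.20.0.0/16")
print(block[0], block[-1], block.num_addresses)  # 10.20.0.0 10.20.255.255 65536
```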

Some IP addresses are reserved for special network locations. For example, the first address I mentioned, 127.0.0.1, is the “localhost,” meaning the current machine. While it may seem strange to access your own machine via a networking request, it’s extremely useful for debugging purposes or even using services which can be hosted both locally and through a network, e.g., Jupyter notebooks. IPv4 reserves the entire block 127.0.0.0/8 of over 16 million addresses for loopback purposes, but often only 127.0.0.1 is supported. For convenience, in many browsers the name “localhost” automatically resolves to the designated loopback address internally. Another example of a reserved block is 192.168.0.0/16, which is intended for local communication, so household routers often have an IP address in this range. An added benefit of knowing this is you should now get the following xkcd comic, if you didn’t before.

The joke is the killer is in the house, that is, the local network.
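The reserved blocks mentioned above can also be inspected with the `ipaddress` module. In this small sketch, `192.168.1.1` is a hypothetical router address picked for illustration.

```python
import ipaddress

loopback = ipaddress.ip_network("127.0.0.0/8")
print(loopback.num_addresses)                         # 16777216
print(ipaddress.ip_address("127.0.0.1") in loopback)  # True
print(ipaddress.ip_address("127.0.0.1").is_loopback)  # True

home = ipaddress.ip_network("192.168.0.0/16")
router = ipaddress.ip_address("192.168.1.1")  # hypothetical router address
print(router in home, router.is_private)              # True True
```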

The 32 bits in IPv4 only offer about 4.3 billion unique addresses, so with the rapid growth of the internet, the last blocks of IPv4 addresses were allocated throughout the 2010s. This was fortunately anticipated many years in advance, prompting the creation of a second addressing scheme called IPv6. It’s conceptually the same except addresses are 128-bit integers, represented as a sequence of eight groups of four hexadecimal digits separated by colons, for example, 2001:0db8:0000:0000:0000:ff00:0042:8329. It has its own syntax for abbreviating this representation and a few other differences that I won’t go over here for brevity, but it’s important to recognize IPv6 addresses since they will become increasingly common in the coming years.
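Without going into the abbreviation rules themselves, a quick way to recognize that two spellings refer to the same IPv6 address is to let the `ipaddress` module normalize them. This sketch uses the example address from above and also shows where the 4.3 billion figure for IPv4 comes from.

```python
import ipaddress

addr = ipaddress.IPv6Address("2001:0db8:0000:0000:0000:ff00:0042:8329")
print(addr.compressed)  # 2001:db8::ff00:42:8329
print(addr.exploded)    # 2001:0db8:0000:0000:0000:ff00:0042:8329

# Both spellings parse to the same 128-bit integer.
print(addr == ipaddress.IPv6Address("2001:db8::ff00:42:8329"))  # True

# Size of the IPv4 address space: 32 bits.
print(2 ** 32)  # 4294967296
```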

The final pieces of the networking puzzle are ports, which allow devices to route network communications to the correct processes. Though it may at first seem excessive to require another layer of addressing after the data reaches its intended device, it helps to consider the perspective of an operating system managing the network communications of various processes. For security and efficiency, processes don’t directly control networking but instead use the operating system as a middle man. Port numbers, then, are essentially internal addresses that operating systems use to route streams of incoming information from the network to the correct endpoints. I say endpoints in plural because different processes can listen or “subscribe” to a single port. For example, the email protocol IMAP is assigned port 143, so multiple email clients can listen on this port to have the operating system pass them data tagged with this value. Port numbers are appended to the end of an IP address and separated with a colon, so 127.0.0.1:143 indicates a channel for IMAP messages on the localhost.
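The role of the operating system as a middle man is easiest to see with a small experiment on the loopback address. The sketch below uses Python's standard `socket` module; rather than a well-known port like 143 (which typically requires elevated privileges), it asks the operating system for any free port, which is an assumption I've made to keep the example runnable anywhere.

```python
import socket

# Ask the OS for any free port on the loopback address (port 0 means "pick one").
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
host, port = server.getsockname()
print(f"listening on {host}:{port}")

# A client reaches the listening process by IP address and port number together.
client = socket.create_connection((host, port))
conn, _ = server.accept()
client.sendall(b"hello")
received = conn.recv(1024)
print(received)

for s in (client, conn, server):
    s.close()
```

The data never leaves the machine, but it still travels through the operating system's networking stack, which uses the port number to hand it to the right socket.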

AWS infrastructure

Though the internet can feel like an intangible place that is somehow both everywhere and nowhere, ultimately the information beamed across the web does exist in a physical location. In Amazon’s infrastructure, these are data centers organized into units called Availability Zones (AZs). AZs are the base unit of the AWS infrastructure, and though they may be composed of separate buildings, they are physically close and effectively operate as one. Regions form the next organizational level and consist of at least three isolated and physically separated AZs in a geographic area as shown below. They are, however, connected with low-latency, high-throughput network connections, so communication within a region is much faster than between regions.

Regions contain at least three availability zones which in turn may be composed of multiple data centers.

In many cases, it is possible to provision resources, such as EC2 instances or S3 buckets, in specific regions. Prices vary across regions, so some resources are cheaper in certain regions and more expensive in others. However, access times also depend on the physical distance between a server and a device requesting a resource, so applications requiring low latencies may need to run in multiple regions simultaneously. On the other hand, as data transfer charges are typically less within AZs or regions, consolidating resources within a single region can reduce costs. All these considerations depend on the exact application in question, so making generalizations is difficult. Fortunately these decisions are usually the responsibility of data architects and engineers rather than scientists. However, a basic understanding of the AWS physical infrastructure is essential for properly configuring the network settings of various resources, as I discuss in the next section.

AWS network configuration

AWS resources exist in a logically isolated virtual network called a Virtual Private Cloud (VPC) which gives users fine-grained control over their networking configuration. Each VPC in turn contains subnets spanning a range of IP addresses where individual addresses correspond to AWS resources, e.g., EC2 instances. While VPCs and subnets are networking abstractions, the underlying physical infrastructure does constrain their organization. For example, VPCs exist within regions, and subnets exist within Availability Zones. In addition to VPCs, AWS has another concept for controlling network traffic called security groups, which are sets of rules that specify the kind of traffic allowed to and from a resource. These rules can specify the address, port, and protocol for outbound or inbound communications, and any traffic not matching those patterns is rejected, effectively acting as a firewall. Though it at first sounds complex, the relationship between these concepts is fairly logical and hierarchical, as shown in the following diagram taken from the AWS documentation.3

VPCs are virtual networks in regions that allow users full control of their networking and security configuration.
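Since a VPC is ultimately a range of IP addresses and its subnets are smaller ranges inside it, the layout can be sketched with CIDR arithmetic alone. The following example is only an illustration of that arithmetic, not a call to any AWS API; the `10.0.0.0/16` range, the /24 subnet size, and the zone names are all assumptions chosen for the example.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")  # hypothetical VPC address range
zones = ["us-east-1a", "us-east-1b", "us-east-1c"]  # example AZ names

# Carve the VPC range into /24 blocks and assign one to each zone.
subnets = dict(zip(zones, vpc.subnets(new_prefix=24)))
for zone, subnet in subnets.items():
    print(zone, subnet, subnet.num_addresses)
```

Each subnet is a non-overlapping slice of the parent range, which mirrors how subnets sit inside a VPC while being tied to a single Availability Zone.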

To me, security groups are the biggest gotcha when configuring AWS resources since not applying the correct security groups will block the desired kind of traffic. For example, to connect to EC2 instances over SSH, they need to be launched with a security group that allows SSH communication from the desired IP addresses. Otherwise, the requests won’t receive a response and will eventually time out. The EC2 launcher contains a menu that specifies the security groups of the instance, even allowing the creation of a new security group on the fly. However, it’s best to create security groups in advance for common configurations to avoid the proliferation of groups with identical rules. Since security groups can apply to multiple types of resources in the AWS ecosystem, the menu for managing them is found under the VPC Dashboard rather than the EC2 Dashboard or Console Home.
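The matching logic behind a security group can be captured in a few lines. What follows is a toy model in plain Python, not the AWS API: the `Rule` class, the `is_allowed` function, and the address range `203.0.113.0/24` are all hypothetical names and values I've picked to illustrate why an SSH request from the wrong address simply goes unanswered.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One inbound rule: protocol, port range, and allowed source range."""
    protocol: str
    from_port: int
    to_port: int
    cidr: str


def is_allowed(rules, protocol, port, source_ip):
    """Return True if any rule matches; unmatched traffic is denied by default."""
    source = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.from_port <= port <= rule.to_port
        and source in ipaddress.ip_network(rule.cidr)
        for rule in rules
    )


# Hypothetical group: SSH (TCP port 22) only from one address range.
ssh_only = [Rule("tcp", 22, 22, "203.0.113.0/24")]
print(is_allowed(ssh_only, "tcp", 22, "203.0.113.7"))    # True
print(is_allowed(ssh_only, "tcp", 22, "198.51.100.14"))  # False
print(is_allowed(ssh_only, "tcp", 8888, "203.0.113.7"))  # False
```

The last line is the classic case of forgetting to open a port for a notebook server: the source address is fine, but no rule covers the port, so the request is dropped.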

Conclusion

Even though this likely felt like a firehose of information, I’ve only scratched the surface of what AWS has to offer. Fortunately, its documentation is generally high quality and very detailed. However, as is often the case with documentation, it’s easy to miss the forest for the trees, especially when first getting started. Hopefully then, this post has clarified the big ideas of AWS (or any cloud provider), allowing you to dive into any of the topics in greater detail on your own!

Cloud and high-performance computing overlap significantly since the latter generally involves requesting and configuring computing resources over a network. HPC, though, more specifically refers to computationally intensive applications where the work is distributed over clusters of individual computing units called nodes. ↩︎

An alternative solution is using containerization software like Docker or Singularity, which is less prone to vendor lock-in. ↩︎

Local Zones are an additional piece of infrastructure that I hadn’t previously discussed. They’re an extension of regional AZs which are designed for applications that require single-digit millisecond latency, meaning they operate closer to their end users and at higher prices. ↩︎